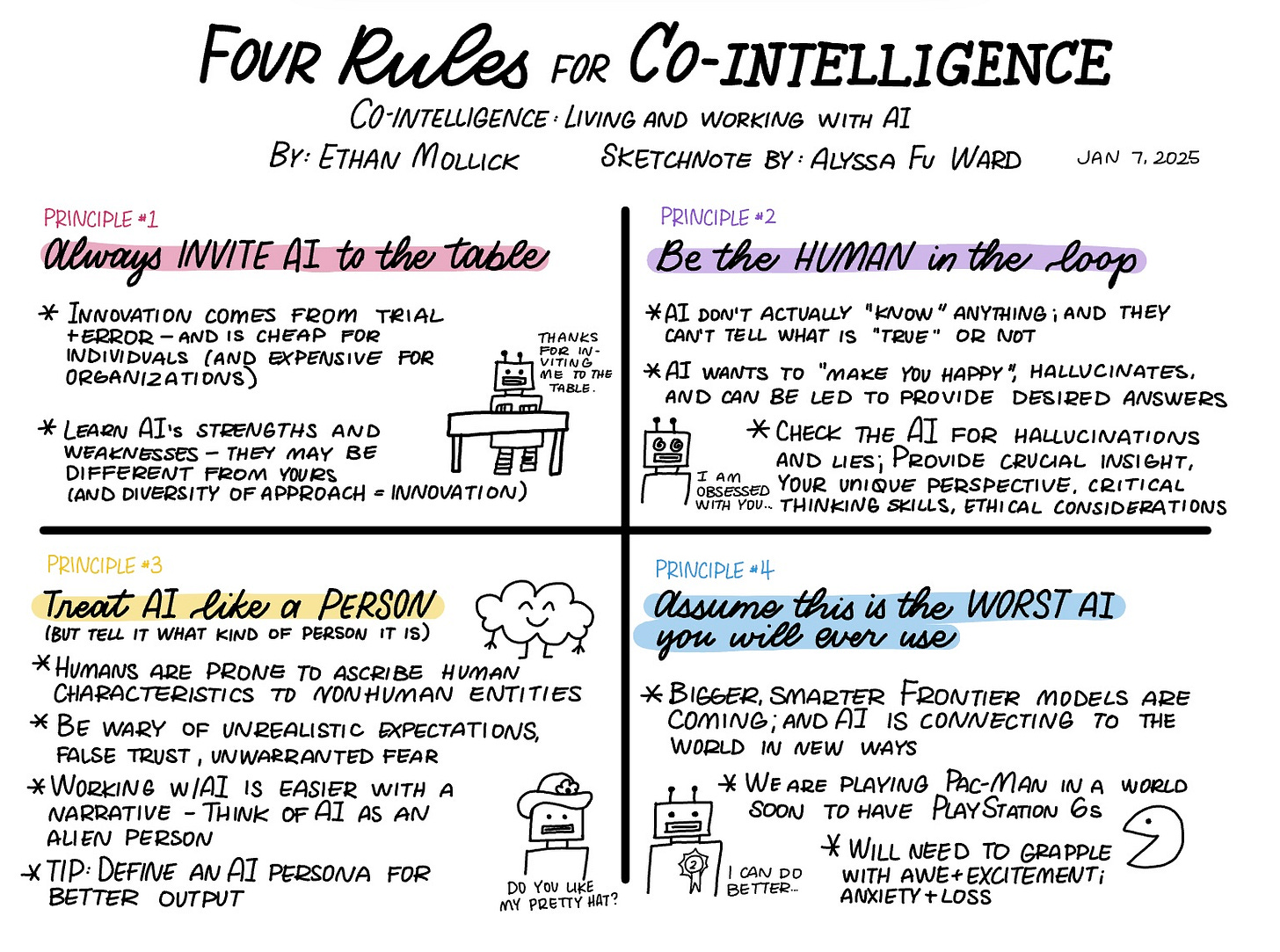

Sketchnote: A summary of Ethan Mollick’s four rules for living and working with AI

As the landscape of AI continues to rapidly evolve, these principles offer flexible, optimistic and concrete guidance for how to navigate that landscape

Throw a metaphorical stone into the ether, and you’d be hard-pressed not to hit some mention of AI. You don’t have to be an engineer training cutting-edge models to be exposed to AI. In fact, there is a growing call for everyone to use AI, at the least to improve their day-to-day activities and at the most to preserve their jobs.

As a data scientist and social psychologist, I often find myself torn between cautious optimism and skepticism about what AI will mean for the future. I recently read the book Co-Intelligence: Living and Working with AI by Ethan Mollick. I found that his book balances the promise, excitement, and benefits of using AI with an honest account of its dangers and the ways it can lead humans astray, sometimes going as far as describing apocalyptic dystopian futures (yikes).

In Chapter 3, he offers four principles on how we can approach AI—whether in the workplace or everyday life—with an open mind and a healthy dose of realism. I summarize them in the sketchnote above and describe some of my reflections on them in the post below.

Principle 1: Always invite AI to the table

There is so much skepticism about the capabilities of AI, and that skepticism is justified. There are countless stories about how AI provides wrong answers and hallucinates responses. I appreciate that this principle is about an invitation, not blind trust. It speaks to how we can’t learn from, adapt to, or improve AI capabilities unless we are open to using AI in the first place.

One point I found particularly good was the contrast between the pace of innovation for organizations and for individuals. It’s expensive for organizations to roll out AI programs and experiment with the benefits of AI. For the everyday employee sitting at her desk doing her normal job, however, it takes almost no effort to try out AI like any other new tool she wants to add to her toolbox. It’s in this space of individual testing that innovation can take off.

Principle 2: Be the human in the loop

Because AI can aggregate and build on a vast pool of knowledge, it’s easy to fall into the trap of granting it an authority it doesn’t actually have. The advantage of being human is that we know how the world works, and we are the source of the new connections that AI must be continually trained on to stay up to date. But the human has to be active when working with AI: she has to question the output and do her due diligence to cross-check the responses, just as she would with any other source of information.

Principle 3: Treat AI like a person

Humans are scary good at anthropomorphizing non-human entities. I remember learning this as an undergrad from studies in which participants played a game called Cyberball with a computer program. The computer partner was programmed either to pass the ball to the human an equal share of the time or to ignore the human and pass the ball to a third partner most of the time. The results showed that participants felt hurt, angry, and ostracized by the computer program, even though it had no intentions.

The same is true of AI, even more so, because AI has human tics that come across as empathy, compassion, and understanding. My interpretation of Ethan Mollick’s argument is to lean into that. Use the tendency to see AI as human, first as a way to make sense of a very complex set of patterns and responses, much like the complex patterns and responses a human provides. But also use it as a way to get the AI to provide more relevant answers. If you give the AI a persona, it will narrow the scope of connections it uses to generate answers, which will then be more tailored to the response you’re looking for.
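To make the persona idea concrete, here is a minimal sketch in Python. It is my own illustration, not something from the book: the function name `build_persona_messages` is hypothetical, and the `role`/`content` message layout is an assumption based on the format many chat tools accept. It only builds the prompt and does not call any AI service.

```python
def build_persona_messages(persona: str, question: str) -> list[dict[str, str]]:
    """Pair a persona with a question in a role/content chat layout (assumed format)."""
    return [
        # The persona goes first so it frames everything the AI says afterwards.
        {"role": "system", "content": f"You are {persona}."},
        # The actual question follows as the user's message.
        {"role": "user", "content": question},
    ]


messages = build_persona_messages(
    persona="a patient statistics tutor who explains ideas with everyday examples",
    question="What does a p-value actually tell me?",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Swapping the persona (say, "a skeptical journal reviewer") while keeping the same question is a quick way to see how much the framing changes the answer you get back.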

Principle 4: Assume this is the worst AI you will ever use

I don’t know why, but this idea tickles my brain so much. Maybe it’s because it taps into my delight in growth. In my post on my art recap for 2024, I wrote about my art process and how one thing I love about it is that if I keep learning and practicing, my worst art will be behind me.

The world of AI is growing quickly, and most efforts around AI are related to improving the models. You don’t have to be the engineer directly training new models to benefit from the improved ones. This principle, in combination with the others, will set you up to build your AI muscle, so your ability to use AI improves as AI improves.

How can you use AI?

As the landscape of AI continues to rapidly evolve, these principles offer flexible, optimistic and concrete guidance for how to navigate that landscape. Whether you are using AI for work or life, these guidelines can help you use AI more effectively.

How can you start applying these principles in your daily life? I’d love to know!