Rise of the robot helper: Exploring example use cases of ChatGPT

A summary of my conversation with an AI writing assistant about social constructs, mental health, creativity, and the erasure of diverse voices

Hi readers,

This week I’m going to switch gears and talk about a new AI (artificial intelligence) tool: ChatGPT.

If you don’t consider yourself a technical person, don’t worry. This isn’t going to be a technical post. What I’m going to share are examples of how anyone can use this developing writing assistant.

What is ChatGPT?

ChatGPT is like a robot helper that can talk to you and answer your questions. It's a computer program that has been trained to understand and respond to human language in a way that's similar to how a person would. Think of it like having a virtual assistant that you can talk to over a computer or phone.

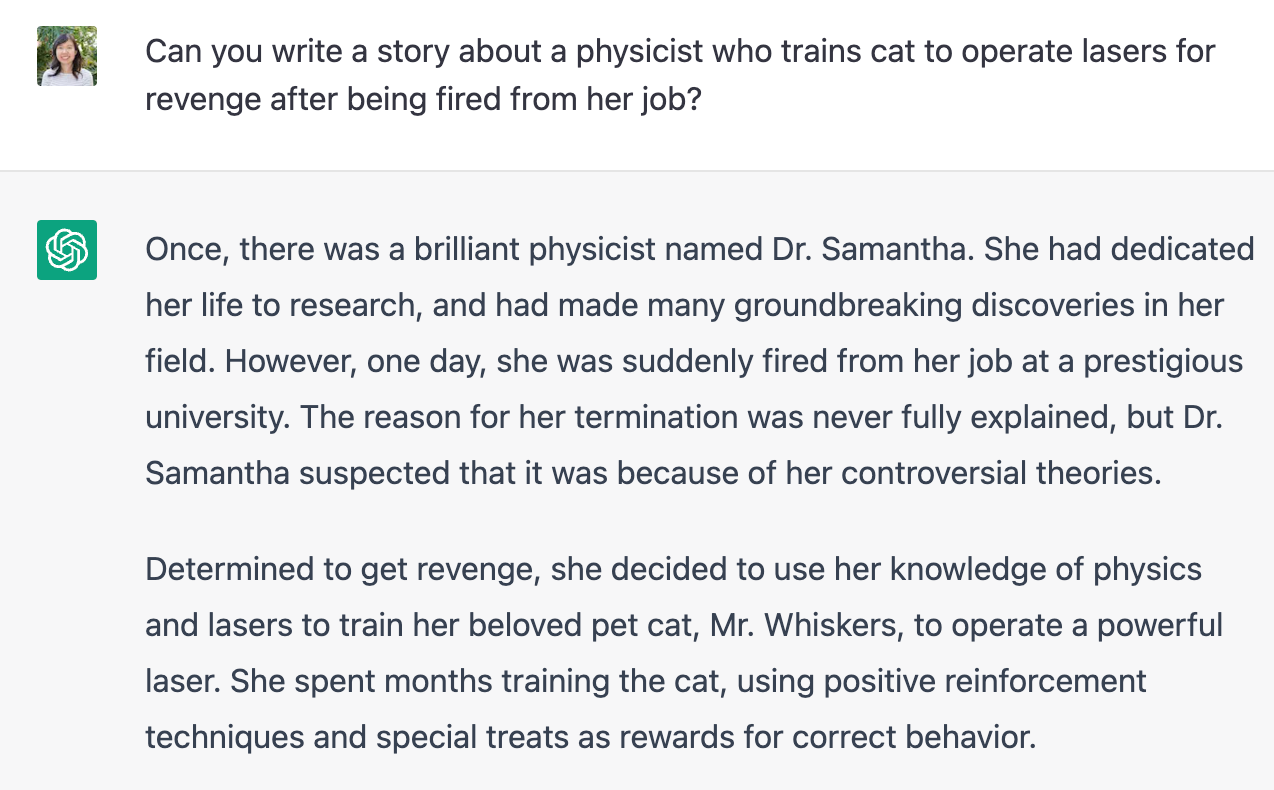

For example, when I asked ChatGPT to describe itself as though it were talking to my mother, it generated the above paragraph. Pretty cool, right?

In addition to asking for information, people can use ChatGPT for nearly any task related to writing text. It can create stories, copy edit and modify submitted paragraphs, produce marketing copy, have conversations, and much more.

ChatGPT benefits and concerns

Before I dive into some concrete examples of ChatGPT’s abilities, a few words on the reception to the growing abilities of AI.

Reactions to AI advancements range from excitement and delight to trepidation. AI can automate manual and repetitive workflows, which opens up time and brain power for more creative tasks. There are also concerns that AI will enable students to cheat on school essays, churn out easy replicas of artists’ hard work, and put people whose work can be automated out of jobs.

As with anything, the pros and cons of a developing technology will depend on the situation. Personally, I feel optimistic about possible use cases enabled by ChatGPT. I also share some concerns when it comes to the development of AI, particularly how it could perpetuate and entrench existing societal biases.

For now, I am happy to merely be a tester and see what this new technological ability is all about. Below I summarize the examples I tried out with some of my commentary.

Testing ChatGPT

Edit: After publishing this post, I realized I was remiss in not including screenshots of the actual conversations. I’ve added them now. Since many of the responses were long, I only included the beginning of each response. Some of the conversations changed since the first time I did the tests, but I believe my commentary still stands.

Definitions of social constructs

The first thing I asked ChatGPT to do (after saying hello; you can never be too polite to potential future robot overlords) was to define social constructs like culture, ethnicity, and race. As a social psychologist, I was curious how ChatGPT would handle these complicated ideas I had spent years trying to understand myself.

The definitions were pretty decent, and surprisingly detailed and nuanced. Here are some excerpts.

“Culture refers to shared beliefs, [...] behaviors and artifacts that characterize a group [... and is] passed down from generation to generation. [...] It shapes the way people think, feel, and behave.”

“Ethnicity refers to shared cultural characteristics, such as language, ancestry, history, and values, that distinguish one group of people from another. [...] It’s also worth noting that Ethnicity is a complex concept that may be influenced by factors such as cultural practices, history, [...] but also by social, economic, and political factors.”

“Race is a social construct that is used to classify people into different groups based on physical characteristics such as skin color, facial features, and texture. [...] It has been used as a justification for discrimination and oppression.”

Definitions may not be an exciting use case. After all, you can easily ask Google for the same information. The difference I see is that ChatGPT, which was trained on many sources, provided a comprehensive, easy-to-understand description. Instead of me having to click through multiple links and summarize what I found, ChatGPT accomplished a relatively good version of the same task. Using my expertise, I can dial the definition up or down, for example when I’m giving talks to different audiences.

Responses to mental health states

Next, I wondered how ChatGPT would respond if someone asked it for mental health support. This use case is close to becoming a reality. A mental health service recently tested giving people ChatGPT-generated responses and found that people rated the ChatGPT responses as more helpful than those provided by a human. That is, until they found out the responses were created by AI; then their trust plummeted. This controversial exercise also prompted questions about the ethical use of AI.

I asked ChatGPT what it would say to someone with depression, and then to someone with anxiety. For both prompts, ChatGPT’s responses were virtually identical. It mentioned validating the person’s experience, being patient and non-judgemental, and encouraging the person to seek professional help.

There was a paragraph or two that differed. For example, in the case of depression, ChatGPT said to make sure to take care of one’s self, too. In the case of anxiety, ChatGPT recommended educating yourself about anxiety. I thought each piece of advice could apply just as well to the other condition.

Overall, I thought the responses were relatively decent and surprisingly thoughtful, but I was disappointed they weren’t more nuanced between the different mental health states.

Poems

My next test was on the creative front, which is where ChatGPT has gotten a lot of attention. When asked to write a story given a few keywords, like in the example I shared at the beginning of this post, ChatGPT has produced elaborate and entertaining tales of adventure and drama. While amusing to many, others have expressed outrage at the perceived inauthenticity and production speed of AI-generated creative products trained using the hard work of human artists.

In my test, I kept it simple. I asked ChatGPT to write a poem about a sunflower, then about a daffodil, then about a sad sunflower. The resulting poems were delightful, surprisingly long (5 stanzas), and followed an AABB rhyming pattern. They used similes and metaphors, and invited the reader to be like the flower subject of the poem.

As with the mental health responses, the three poems closely resembled each other, sometimes using the same imagery. The sad poem did capture a sad feeling, describing the sunflower as standing “wilted and low / Its petals drooping, in a sad show” in contrast to the generic sunflower that stood “tall and strong [...] / Its yellow petals, like a ray, / Shine bright all day”.

I would say creatives are safe for the time being. Humans have the superpower of making near-instant connections based on past and new information and experiences. AI is trained on past data, and until it’s retrained on new data, its outputs will start to look and feel stale. I think if a creator is experiencing writer’s block, these AI tools could be a good way to get past a blank page.

Edits on a draft paragraph and a surprising conversation on erasure of voice

In my final set of tests, I gave ChatGPT a draft paragraph from a blog post I was writing on New Year’s resolutions, and I asked it to fix the paragraph up.

Instead of giving you my assessment of the resulting paragraph, I’ll let ChatGPT tell you. I asked it how it decided to revise the paragraph. It went through each line and explained how its intention was to make my message more specific and clear, more direct and actionable, and the advice I provided more encouraging. I think overall it accomplished those goals.

One interesting decision ChatGPT made was to remove the phrase, “give yourself a little hug.” When I asked why it decided to remove that phrase, it said it thought the phrase was unnecessary and somewhat ambiguous. It also said the phrase may be perceived as childish or overly emotional.

I followed up by telling it I was a woman writing this paragraph, and the phrase “give yourself a little hug” struck me as a feminine way of writing. I asked if it was possible that its suggestion was erasing my experience as a woman and neutralizing my comment to maintain a misogynistic system.

The response by ChatGPT was fascinating. It first apologized and said it wasn’t its intention to erase my experience as a woman. It reiterated why it made that decision, then acknowledged that different people have different writing styles and I should feel free to include that phrase in my writing.

As with the earlier responses, I was mostly satisfied with this answer. I did think it sounded slightly defensive, perhaps because it kept emphasizing why it made that decision, but I was impressed that it apologized and acknowledged the nuances of different writing styles.

I was left wondering: if people don’t question the suggestions made by these AI tools, will our language and creative outputs start to become sanitized? Would diverse ideas and experiences be even less represented in society than they are already? I would be curious to see what AI model outputs would look like if trained on datasets using primarily non-mainstream sources.

(For an amazing discussion on the importance of considering psychology and culture to develop responsible AI, check out this post by Molly Lewis.)

Conclusions

There’s definitely a strong novelty effect when playing around with ChatGPT. I couldn’t stop laughing at some of the outputs, especially at the poems and stories ChatGPT created.

I am optimistic about how ChatGPT can free up our time by automating manual tasks. I like the idea of using it as a starting point, especially if experiencing writer’s block, and building from there. I don’t see it as being any different from using other reference sources. After all, no work is truly original; anything produced has been shaped by the products that came before it.

I also like the idea of ChatGPT helping people with areas outside their expertise. If a data scientist wants to focus their time on coding and analyzing data and not on writing reports (and isn’t good at writing them), why not pass that task over to a robot? I believe it would still require a human eye to make sure the report accurately represents the human’s intention. But think of the time and energy that could be saved by keeping a person in their state of flow on tasks they enjoy and are good at, instead of pulling them away to work on a task that drains their energy.

If you don’t consider yourself a technical person, still consider testing out ChatGPT. I predict it will eventually be a tool as ubiquitous as word processors and email. As this technology develops, the inclusion of different voices will be crucial. Share your feedback, share your unique experiences, and let’s help AI capture and represent the richness of our human experience.